1.5 KiB

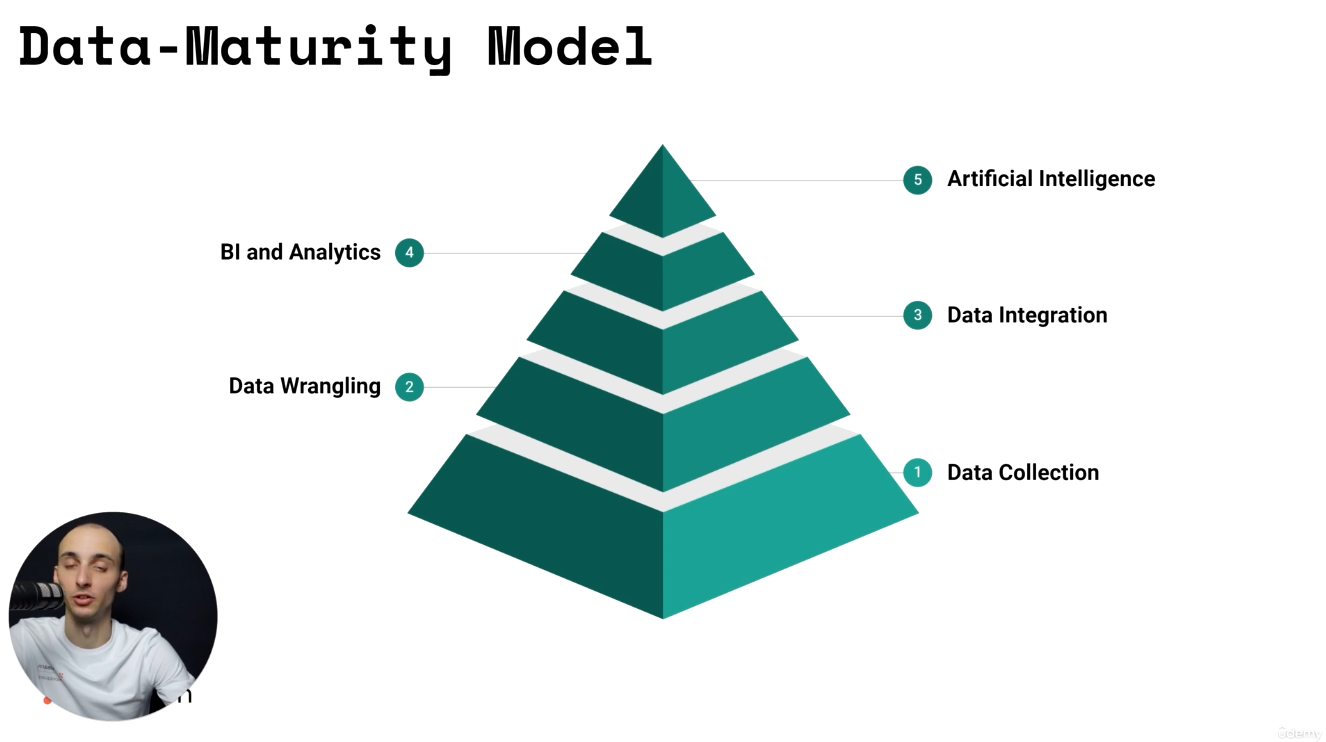

Data Maturity Model

Maslow hierarchy of needs, data version:

Data collection

You get data from places.

You place it as is in some staging area.

Data wrangling

Cleaning stuff up, deduplicating, yadi yada.

Data integration

Place everything together nicely in the same place in a usable model.

ETL

ETL made sense before. Storage was expensive and you only wanted to load the strictly necessary data into the target DWH.

But this comes with problems:

- You have a lot of statefulness.

- Debugging and testing pipelines is a pain in the ass.

- You need to do transformations outside of your target database.

- Schema changes were nightmares.

ELT is the new shiny toy:

- We read raw data from source system and load it into our DWH/Data Lake.

- We do our transformations in the target system.

- Schema changes become much more manageable.

Datawarehouses and data lakes

Datawarehouse

DWH -> Any database that:

- We use to ground our BI and reporting on.

- Optimized for reads.

- Typically structured in facts and dimensions.

Data Lake

Decouple storage from compute. Use S3/Blob Storage/Hadoop HDFS for storage. Everything gets stored as files. Use a separate query engine, like Athena, Trino, Spark.

Data Lakehouse

Just use both. A data lake with some DWH layer on top. Pretty much, a swamp of files with some governance, modelling tool sitting on top of it to control access and ease queries.